This piece, the third in our four-part series on best-demonstrated research practices, explores the prevalence of fraudulent responders and their impact on data quality.

VeraQuest understands the value of fast, inexpensive research in today’s marketing research environment, which is why our business was built with speed and cost in mind. However, we also recognize the critical role best-demonstrated practices play in ensuring quality results.

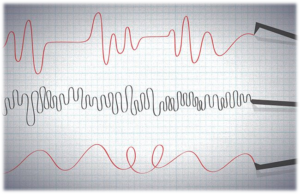

We use the term “fraudulent responder” loosely to describe any number of offenders: straight-liners and speeders, imposters, and bots, the insidious programs that troll the Internet impersonating humans. We also include thoughtless responders in the overall mix. Thoughtless responders often (though not always) begin a survey by answering thoughtfully, but then lose interest and start selecting responses aimlessly. By some accounts, including our own, as many as 15% to 20% of respondents in a given study may engage in fraudulent or thoughtless behavior. These figures are drawn from a research-on-research study we conducted, which was published in Quirks in 2014, and from a more recent study by Elevated Research. And though one can ponder the motivations of fraudulent and thoughtless respondents, the underlying reasons, while perhaps interesting from a sociological or academic perspective, are essentially moot for those of us who must deal with the implications of bad data in our surveys.


In terms of bots, most reputable sample companies do what they can to eliminate them, for example by implementing speeding algorithms that detect when questions are being answered too quickly, but these automated programs continue to evolve and are becoming harder to detect. Panel companies and programming facilities can only do so much. Most of the responsibility for identifying and eliminating fraudulent responders needs to be shouldered by whoever designs the questionnaire.
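To make the idea of a speeding check concrete, here is a minimal sketch in Python. It is an illustration only, not the algorithm any particular sample company uses: the function name `flag_speeders`, the sample durations, and the cutoff of one third of the median completion time are all assumptions chosen for the example.

```python
from statistics import median

def flag_speeders(durations, fraction=1/3):
    """Return the IDs of respondents who finished in less than
    `fraction` of the median completion time.

    `durations` maps a respondent ID to completion time in seconds.
    The one-third default is an assumed cutoff, not an industry standard.
    """
    cutoff = median(durations.values()) * fraction
    return {rid for rid, secs in durations.items() if secs < cutoff}

# Hypothetical completion times, in seconds
durations = {"r1": 600, "r2": 540, "r3": 90, "r4": 660, "r5": 580}
print(flag_speeders(durations))  # r3 finished far faster than everyone else
```

A relative cutoff like this adapts to survey length, but it only catches respondents who are fast across the whole survey. It would not catch someone who starts thoughtfully and then rushes, which is one reason timing checks are usually combined with other screens.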

It’s my experience that clients avoid thinking about the impact of fraudulent and thoughtless responders because it’s disturbing and they perceive they lack the control to fix it. While we agree it’s disturbing, researchers can control the situation by being selective about who designs and administers their surveys. In addition to speeder algorithms, there are a variety of cheater questions researchers can employ to catch fraudulent or thoughtless respondents. Beyond cheater questions (ours are proprietary), we recommend scrutinizing responses to open-ended questions. There, we often find an abundance of clues as to who is being thoughtful and who is not. We often see at least some stock answers in the open ends, such as “excellent” or “strongly agree,” that are unrelated to the question and are therefore highly indicative of someone who has experience ploughing through surveys with minimal effort. We also usually see some gibberish, such as “ksjfjkkj,” which is virtually an automatic disqualification for us. If respondents care so little about answering the open ends, how much stock can we put in their answers to the rest of the survey?
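The open-end review described above can be partly automated as a first pass. The sketch below is a rough illustration under stated assumptions, not our proprietary screening: the function name `screen_open_end` is hypothetical, the stock-answer list contains only the two examples mentioned above, and the gibberish test (six or more consonants in a row) is a crude heuristic chosen for the example.

```python
import re

# Only the two stock answers named in the text; a real list would be longer.
STOCK_ANSWERS = {"excellent", "strongly agree"}

# Assumed heuristic: six or more consecutive consonants suggests keyboard mashing.
CONSONANT_RUN = re.compile(r"[bcdfghjklmnpqrstvwxz]{6,}")

def screen_open_end(text):
    """Return 'stock', 'gibberish', or None for one open-ended answer."""
    cleaned = text.strip().lower().rstrip(".!")
    if cleaned in STOCK_ANSWERS:
        return "stock"
    if CONSONANT_RUN.search(cleaned):
        return "gibberish"
    return None

for answer in ["Excellent", "ksjfjkkj", "The packaging felt cheap and hard to open."]:
    print(repr(answer), "->", screen_open_end(answer))
```

A screen like this ignores the question being asked, so a flagged stock answer still needs a human to confirm it is unrelated to the question, and the consonant rule can occasionally misfire on unusual but legitimate words.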

The implementation of systems that can identify and eliminate fraudulent and thoughtless responders is not without some challenges. While some responders can be caught during the data collection process, some of the nitty-gritty work can only be done after data collection is completed, thereby potentially affecting sampling quotas and weighting procedures. Implementation can slow down the turnaround time somewhat, and the cheater questions can add to the overall survey length and thus cost. However, the question we must ask ourselves is this: are we willing to accept that there’s a good chance 15% to 20% of our data will be incorrect, or will we do what’s necessary to ensure we get reliable results on which to base important decisions?
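To illustrate why removing respondents after fieldwork touches quotas and weighting, here is a small sketch using simple post-stratification, where each group's weight is its target share divided by its achieved share. The function name `cell_weights`, the 50/50 targets, and the sample itself are hypothetical values chosen for the example.

```python
from collections import Counter

def cell_weights(groups, targets):
    """Post-stratification weight per group: target share / achieved share."""
    counts = Counter(groups)
    n = len(groups)
    return {g: targets[g] / (counts[g] / n) for g in counts}

targets = {"male": 0.5, "female": 0.5}      # hypothetical population shares
sample = ["male"] * 5 + ["female"] * 5      # quotas met at close of field
flagged = {0, 1}                            # hypothetical indices removed in cleaning
cleaned = [g for i, g in enumerate(sample) if i not in flagged]

print(cell_weights(sample, targets))   # both groups weighted 1.0
print(cell_weights(cleaned, targets))  # remaining men weighted up, women down
```

In this example, dropping two of five men leaves a quota cell short, so each remaining man counts for about 1.33 respondents and each woman for 0.8. The alternative is to return to field to replace the removed completes, which is where the added time and cost come from.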
