The first of our four-part series on best-demonstrated research practices explores the relationship between quota sampling and weighting, and how to use both to efficiently maximize a study’s data quality.

VeraQuest understands the value of fast, inexpensive research in today’s marketing research environment, which is why our system was built with speed and cost in mind. However, we also recognize the critical role that best-demonstrated practices play in ensuring quality results.

In research, we often hear the term “representative” with respect to sample. That’s because when we want to understand our customers, it’s best when our sample closely matches the universe of interest. Put another way, the closer our sample comes to approximating our target audience, the more confidence we have that the findings from our research will reflect the real world. Therefore, to make the sample look like the universe, we identify quota targets and send out survey invitations in the hopes of getting respondents who reflect reality.

Since filling each quota cell exactly is neither practical from a time perspective nor viable economically, good research firms judiciously apply weighting factors that raise or lower the relative importance of each respondent. We can think of it as “fine-tuning” the sample. Weighting allows respondents who are under- or over-represented in the sample to assume their correct level of importance so our data accurately reflects the universe being studied.

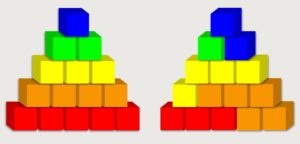
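As a rough illustration of the weighting idea described above, the sketch below computes one weighting factor per quota cell as the target share divided by the share actually achieved in the sample. The two-cell quota plan, the counts, and the function name `cell_weights` are hypothetical, chosen only to keep the arithmetic easy to follow; a real study would use more cells and dimensions.

```python
def cell_weights(targets, counts):
    """Return one weighting factor per quota cell.

    targets: cell -> target share of the universe (shares sum to 1)
    counts:  cell -> number of completed interviews in that cell
    """
    total = sum(counts.values())
    weights = {}
    for cell, target_share in targets.items():
        sample_share = counts[cell] / total
        # Under-represented cells get a factor above 1,
        # over-represented cells get a factor below 1.
        weights[cell] = target_share / sample_share
    return weights


# Hypothetical example: the universe is split 50/50,
# but the achieved sample came in at 45/55.
targets = {"men": 0.50, "women": 0.50}
counts = {"men": 450, "women": 550}

weights = cell_weights(targets, counts)
for cell, factor in weights.items():
    print(f"{cell}: weighting factor {factor:.3f}")
```

In this made-up case each man counts slightly more than one respondent and each woman slightly less, so the weighted sample matches the 50/50 target while the weighted total still equals the number of completed interviews.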

Weighting, however, has its limitations. It’s most effective when differences between quota targets and the actual sample are relatively small. When the differences become large, the weighting factors grow too large and can distort the data. In essence, quota sampling should get us 95% of the way to where we want to be, and weighting the final 5%. Therefore, it’s always best to meet quota sampling requirements as closely as possible, so that only minimal weighting needs to be applied.

We can tell whether a weighting scheme has become problematic by looking at a measure called “weighting efficiency,” which gauges the impact that weighting has on our data. Weighting efficiencies of 90% or higher are great, 80% to 89% are good, and 70% to 79% are okay at best. Once the weighting efficiency drops below 70%, our ability to draw accurate inferences from the data diminishes, because lower weighting efficiencies have the same effect as reducing the sample size. In other words, the extra 500 respondents you just paid $1,500 for in order to boost your sample from n = 1,000 to n = 1,500 may be for naught if over-weighting lowers the “effective” sample back to n = 1,000.
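The article does not spell out how weighting efficiency is calculated. One widely used definition, assumed here rather than taken from the text, is Kish’s approximation: the effective sample size is the squared sum of the weights divided by the sum of the squared weights, and efficiency is that figure divided by the actual sample size. The sketch below applies it to two hypothetical 1,000-person samples weighted to a 50/50 target; the helper names and the data are illustrative only.

```python
def respondent_weights(targets, counts):
    """Expand per-cell weighting factors into one weight per respondent."""
    total = sum(counts.values())
    weights = []
    for cell, n_cell in counts.items():
        factor = targets[cell] * total / n_cell  # target share / sample share
        weights.extend([factor] * n_cell)
    return weights


def weighting_efficiency(weights):
    """Kish approximation (an assumption, not the article's stated formula):
    effective sample size divided by actual sample size."""
    sum_w = sum(weights)
    sum_w_squared = sum(w * w for w in weights)
    effective_n = sum_w ** 2 / sum_w_squared
    return effective_n / len(weights)


targets = {"men": 0.50, "women": 0.50}

# Hypothetical sample that nearly met its quotas (45/55).
close = respondent_weights(targets, {"men": 450, "women": 550})
# Hypothetical sample that missed its quotas badly (20/80).
far = respondent_weights(targets, {"men": 200, "women": 800})

for label, weights in (("close to quota", close), ("far from quota", far)):
    efficiency = weighting_efficiency(weights)
    print(f"{label}: efficiency {efficiency:.0%}, "
          f"effective n = {efficiency * len(weights):.0f}")
```

Under these assumptions the sample that nearly met its quotas keeps an efficiency of about 99%, while the sample that missed them badly falls to about 64%, so its 1,000 interviews carry roughly the weight of 640.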

There are two ways to minimize the effect of over-weighting. The first is to simplify the quota requirements. The second is to do an effective job of meeting the requirements. While three- and sometimes four-dimension quota sampling plans are typically the norm, we use a more rigorous six-dimension plan because it provides greater overall representativeness. To us, going to fewer dimensions is like admitting we won’t score well on an exam and therefore making the exam easier. While that may be a reasonable strategy for achieving a high weighting efficiency, it’s not an effective recipe for generating high-quality results.

A survey’s actual sample will rarely match the population exactly; however, a robust sampling plan coupled with the prudent use of weighting can enable the researcher to generate data that closely approximates the universe while minimizing the likelihood of over-spending on sample.

Please watch for our upcoming piece on the art of using images in surveys.
